Reshaping the Future of AI

In the ever-evolving landscape of artificial intelligence, one family of models stands out for its ability to understand and process sequences of data: Recurrent Neural Networks (RNNs). These networks have had a major impact on fields ranging from natural language processing to time series analysis. In this article, we discuss sequence data processing with RNNs, exploring their architecture, applications, and impact on the AI landscape.

Sequences are the Heartbeat of Data

Sequences are everywhere. They form the heartbeat of data in domains such as natural language, speech, and music, where the order of the elements carries meaning. Traditional feedforward neural networks process each input independently, with no memory of earlier inputs. RNNs, by contrast, are designed to capture patterns and dependencies across the elements of a sequence, which has made them a cornerstone of many AI advances.

The RNN Architecture

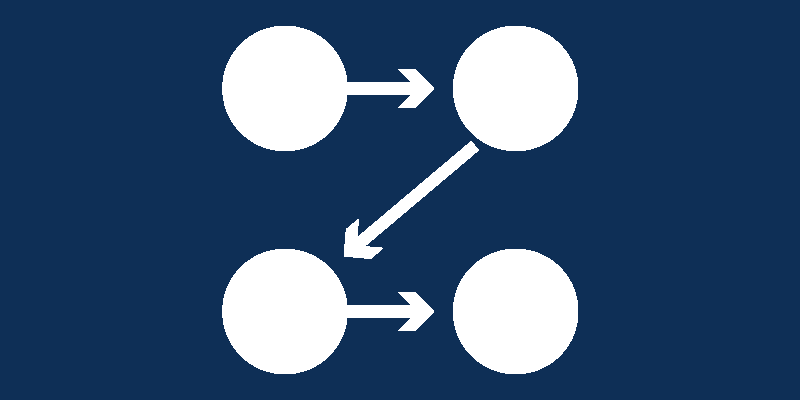

At the core of RNNs lies their recurrent nature: the hidden state computed at one time step is fed back into the network at the next. This loop lets an RNN remember and use information from previous time steps, which gives it the sequential context it needs to interpret the current input. The three main components of an RNN are:

- Input Layer: Receives input data at each time step

- Hidden Layer: Combines the current input with the hidden state from the previous time step to compute a new hidden state, which acts as a memory unit that carries context forward

- Output Layer: Produces the prediction or output for the current time step
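To make the three components concrete, here is a minimal sketch of a single-layer RNN forward pass in plain Python. It assumes the common Elman-style update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h) with a linear output y_t = W_hy h_t + b_y. The weight names, sizes, and random initialization are illustrative assumptions, and no training is shown.

```python
import math
import random

# Minimal sketch of an Elman-style RNN forward pass (an assumed formulation;
# real libraries add batching, training, and more). Weights are random
# placeholders, not trained values.

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of floats)."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def vec_add(*vectors):
    """Element-wise sum of equally sized vectors."""
    return [sum(values) for values in zip(*vectors)]

def random_matrix(rows, cols, rng, scale=0.5):
    """Hypothetical initializer: small uniform random weights."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def rnn_forward(inputs, W_xh, W_hh, W_hy, b_h, b_y):
    """Return the output and hidden state for every time step of `inputs`."""
    h = [0.0] * len(b_h)  # initial hidden state h_0 = 0
    outputs, hiddens = [], []
    for x in inputs:  # input layer: one vector per time step
        # Hidden layer: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
        h = [math.tanh(v) for v in vec_add(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        # Output layer: y_t = W_hy h_t + b_y
        outputs.append(vec_add(matvec(W_hy, h), b_y))
        hiddens.append(h)
    return outputs, hiddens

rng = random.Random(0)
input_size, hidden_size, output_size = 3, 4, 2
W_xh = random_matrix(hidden_size, input_size, rng)
W_hh = random_matrix(hidden_size, hidden_size, rng)
W_hy = random_matrix(output_size, hidden_size, rng)
b_h, b_y = [0.0] * hidden_size, [0.0] * output_size

sequence = [[rng.uniform(-1, 1) for _ in range(input_size)] for _ in range(5)]
outputs, hiddens = rnn_forward(sequence, W_xh, W_hh, W_hy, b_h, b_y)
print(len(outputs), len(outputs[0]))  # 5 2 -> five time steps, two outputs each
```

`W_hh` feeds `h` back in at every step. As a result, changing only the first input in `sequence` also changes the final hidden state. That carried-over context is the "memory" the hidden layer provides.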

Vanishing and Exploding Gradient Problem

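While RNNs can process sequences, they face challenges in training. RNNs are trained with backpropagation through time: the network is unrolled across the sequence, and a gradient flowing back from a late time step is multiplied by the recurrent weights and the activation's derivative once for every step it crosses. One major issue is the vanishing gradient problem. When these repeated factors are smaller than one in magnitude, gradients shrink exponentially as they are backpropagated through time, so the network struggles to learn dependencies that span many steps. This hampers learning especially on long sequences. The exploding gradient problem is the opposite: when the factors are larger than one, gradients grow exponentially and produce unstable weight updates.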
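The multiplicative effect behind both problems can be sketched with a one-unit RNN. This is a simplified illustration under several assumptions: a scalar hidden state, a fixed recurrent weight `w`, and a constant input `x`. The exploding case uses a linear (identity) activation so that the growth is not masked by tanh saturation. The function name `gradient_through_time` is hypothetical.

```python
import math

def gradient_through_time(w, steps, activation="tanh", x=0.1):
    """Return d h_T / d h_0 for the scalar recurrence h_t = f(w * h_{t-1} + x).

    Backpropagation through time multiplies one local derivative per step:
    d h_t / d h_{t-1} = w * f'(w * h_{t-1} + x).
    Simplifying assumptions: scalar state, fixed weight, constant input.
    """
    h = 0.0
    grad = 1.0
    for _ in range(steps):
        pre = w * h + x
        if activation == "tanh":
            h = math.tanh(pre)
            local = w * (1.0 - h * h)  # tanh'(pre) = 1 - tanh(pre)^2
        else:  # linear (identity) activation, used here to expose explosion
            h = pre
            local = w
        grad *= local
    return grad

for steps in (10, 50, 100):
    vanishing = gradient_through_time(0.5, steps)            # shrinks toward 0
    exploding = gradient_through_time(1.5, steps, "linear")  # grows without bound
    print(steps, vanishing, exploding)
```

With `w = 0.5`, every local factor is at most 0.5, so the gradient after `T` steps is at most 0.5^T. With `w = 1.5` and no squashing, it is exactly 1.5^T.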

Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU)

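To address the vanishing gradient problem in particular, gated RNN architectures such as LSTM and GRU were introduced. LSTMs add a separate memory cell alongside the hidden state and use gates (input, forget, and output) to control what information is written to, kept in, and read from that cell. GRUs, on the other hand, merge the memory cell and hidden state into a single state and use only two gates (update and reset). This simplifies the architecture while retaining the ability to capture long-range dependencies.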
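The gating idea can be sketched as single-step LSTM and GRU updates in plain Python. This follows one common textbook formulation: forget, input, and output gates plus a candidate for the LSTM, and update and reset gates for the GRU. Libraries differ in details such as extra bias terms and which side of the GRU interpolation the update gate weights. The helper names (`affine`, `init_params`) and the random weights are hypothetical placeholders rather than trained parameters.

```python
import math
import random

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def affine(W, U, b, x, h):
    """Compute W x + U h + b for list-of-rows matrices W, U and vectors x, h, b."""
    return [sum(wi * xi for wi, xi in zip(W_row, x)) +
            sum(ui * hi for ui, hi in zip(U_row, h)) + bias
            for W_row, U_row, bias in zip(W, U, b)]

def lstm_step(x, h, c, p):
    """One LSTM step; p maps gate name -> (W, U, b). Returns (new_h, new_c)."""
    f = [sigmoid(v) for v in affine(*p["forget"], x, h)]       # how much old cell to keep
    i = [sigmoid(v) for v in affine(*p["input"], x, h)]        # how much new info to write
    o = [sigmoid(v) for v in affine(*p["output"], x, h)]       # how much cell to expose
    g = [math.tanh(v) for v in affine(*p["candidate"], x, h)]  # candidate cell values
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new

def gru_step(x, h, p):
    """One GRU step; a single state vector plays the role of the LSTM's h and c."""
    z = [sigmoid(v) for v in affine(*p["update"], x, h)]  # how much old state to keep
    r = [sigmoid(v) for v in affine(*p["reset"], x, h)]   # how much old state feeds the candidate
    reset_h = [rk * hk for rk, hk in zip(r, h)]
    n = [math.tanh(v) for v in affine(*p["candidate"], x, reset_h)]
    return [zk * hk + (1.0 - zk) * nk for zk, hk, nk in zip(z, h, n)]

def init_params(names, input_size, hidden_size, rng):
    """Random (W, U, b) per gate: illustrative values, not trained weights."""
    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
    return {name: (mat(hidden_size, input_size), mat(hidden_size, hidden_size),
                   [0.0] * hidden_size) for name in names}

rng = random.Random(0)
input_size, hidden_size = 3, 4
lstm_p = init_params(["forget", "input", "output", "candidate"], input_size, hidden_size, rng)
gru_p = init_params(["update", "reset", "candidate"], input_size, hidden_size, rng)

sequence = [[rng.uniform(-1, 1) for _ in range(input_size)] for _ in range(6)]
h_lstm, c_lstm = [0.0] * hidden_size, [0.0] * hidden_size
h_gru = [0.0] * hidden_size
for x in sequence:
    h_lstm, c_lstm = lstm_step(x, h_lstm, c_lstm, lstm_p)  # two states threaded through time
    h_gru = gru_step(x, h_gru, gru_p)                      # one merged state
```

`lstm_step` carries two vectors (`h` and `c`) from step to step, while `gru_step` carries only one. When the LSTM's forget gate saturates near 1 and its input gate near 0, the cell value passes through a step almost unchanged. That is how the gates let information survive many time steps.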

Applications of Sequence Data Processing

- Natural Language Processing (NLP): RNNs have transformed language understanding and generation. They have been used for machine translation, sentiment analysis, and language modeling

- Speech Recognition and Synthesis: RNNs have been used to convert spoken language into text and text into speech, contributing to voice assistants and automatic speech recognition systems

- Music Generation: RNNs can generate music by learning patterns from existing musical sequences and then producing new sequences one step at a time

- Time Series Analysis: RNNs' ability to model time-dependent data has been applied to financial forecasting (including stock price prediction) and to weather forecasting
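For the time series case, a common first step is to turn one long series into many (input sequence, target) training pairs that an RNN can consume one step at a time. The sketch below assumes a sliding-window setup for forecasting. The function name `make_windows`, its `window` and `horizon` parameters, and the sample values are all hypothetical.

```python
def make_windows(series, window, horizon=1):
    """Split a 1-D series into (input window, target) pairs for forecasting.

    Each input is `window` consecutive values; the target is the value
    `horizon` steps after the window ends (horizon=1 means the next value).
    """
    if window < 1 or horizon < 1:
        raise ValueError("window and horizon must be positive")
    pairs = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        pairs.append((inputs, target))
    return pairs

daily_values = [10, 11, 13, 12, 15, 16, 18]  # made-up numbers for illustration
for inputs, target in make_windows(daily_values, window=3):
    print(inputs, "->", target)  # first line: [10, 11, 13] -> 12
```

Each input window would be fed to the RNN one value per time step, with the target compared against the output at the final step.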

Challenges and Future Directions

While RNNs have revolutionized sequence data processing, they are not without challenges. Training RNNs on long sequences can still be computationally expensive, partly because each time step depends on the hidden state from the previous one, so the computation cannot be parallelized across time steps. Their performance can also degrade in the presence of noisy or incomplete data. Researchers continue to explore enhancements to RNN architectures and training techniques to overcome these limitations.

In the field of AI, Recurrent Neural Networks play a pivotal role in understanding and processing sequence data. Their architecture, designed to carry context across time steps, has paved the way for major advances in fields like NLP, speech recognition, and time series analysis. As we navigate new applications of AI, RNNs remain at the forefront of sequence modeling, helping to reshape the future of technology.