A Crucial Step for Accurate Models

In machine learning, training a model begins with a fundamental step: splitting the available data into a training set and a testing set. This simple yet pivotal step underpins every trustworthy estimate of how well a predictive model will perform. In this guide, we look at the nuances of data splitting, why it matters, and strategies for getting this stage of model development right.

Generalization and Evaluation

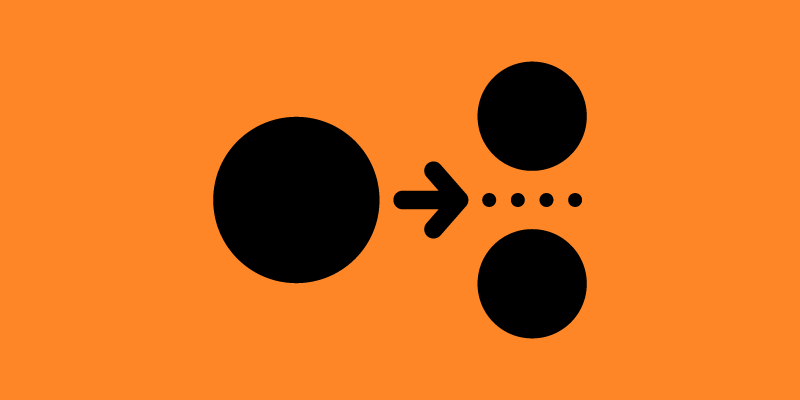

Data splitting serves two goals: generalization and evaluation. The model should learn patterns from one set of data and then be evaluated on data it has never seen. By separating the dataset into two segments, the training set and the testing set, you let the model learn from one and measure its performance on the other. Scoring a model on the same data it trained on would make it look better than it really is, because it may simply have memorized those examples.
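
To make this concrete, here is a minimal sketch in plain Python (no ML library assumed) that shuffles row indices and cuts them into a training portion and a testing portion. The function name `train_test_split_indices`, the 20% test fraction, and the seed value are illustrative choices, not part of any particular library. scikit-learn users would typically reach for `sklearn.model_selection.train_test_split` instead.

```python
import random

def train_test_split_indices(n_samples, test_fraction=0.2, seed=42):
    """Shuffle row indices with a seeded RNG and cut them into train/test lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    indices = list(range(n_samples))
    rng = random.Random(seed)          # local generator, so the split is reproducible
    rng.shuffle(indices)
    n_test = round(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]   # (train, test)

# Usage: 10 rows, 20% held out for testing
train_idx, test_idx = train_test_split_indices(10, test_fraction=0.2, seed=0)
print(len(train_idx), len(test_idx))   # 8 2
```

Every row lands in exactly one of the two lists, so no example is used for both training and testing.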

Training Set versus Testing Set

The training set is akin to a schoolroom for your model. It’s the data on which the model hones its predictive skills. As it works through many examples, the model learns the underlying relationships, captures patterns, and adjusts its parameters to minimize prediction error.

Once the model has been trained, it’s time to assess it on unseen data. The testing set serves as a litmus test: a critical evaluation of how well the model generalizes what it learned to new instances. Beyond measuring performance, comparing test results with training results exposes common pitfalls. A model that scores much better on the training set than on the testing set is likely overfitting. One that scores poorly on both is likely underfitting. Without a testing set, either problem would surface only once the model is making real-world predictions.
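
The train-versus-test comparison can be illustrated with a deliberately extreme example. The plain-Python sketch below assumes a toy "model" (the hypothetical `fit_memorizer`) that memorizes every training example and guesses the majority training label for anything new. It scores perfectly on the training set but gets only half of the testing set right, the signature of overfitting. The synthetic data and the every-fifth-row test split are purely illustrative.

```python
from collections import Counter

def accuracy(model, xs, ys):
    """Fraction of examples whose predicted label matches the true label."""
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(ys)

def fit_memorizer(xs, ys):
    """A deliberately overfit 'model': it memorizes every training pair and
    falls back to the majority training label for anything it has not seen."""
    table = dict(zip(xs, ys))
    majority = Counter(ys).most_common(1)[0][0]
    return lambda x: table.get(x, majority)

# Synthetic data: the label is 1 when the last digit of x is 0, 3, or 6
xs = list(range(100))
ys = [1 if x % 10 in (0, 3, 6) else 0 for x in xs]

# Hold out every fifth example as the test set (illustrative, not a recommendation)
train = [(x, y) for x, y in zip(xs, ys) if x % 5 != 0]
test = [(x, y) for x, y in zip(xs, ys) if x % 5 == 0]
train_x, train_y = zip(*train)
test_x, test_y = zip(*test)

model = fit_memorizer(train_x, train_y)
train_acc = accuracy(model, train_x, train_y)
test_acc = accuracy(model, test_x, test_y)
print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
# train accuracy: 1.00, test accuracy: 0.50
```

Judged on the training data alone, this model would look flawless. Only the held-out rows reveal that it has learned nothing it can apply to new inputs.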

Strategies for Splitting Data

While the principle of data splitting is clear, choosing how to divide the data requires careful consideration. Let’s explore some commonly used techniques:

- Random Splitting: This method randomly divides the dataset into training and testing sets. While simple and intuitive, it may not be suitable when the data has temporal dependencies, groups of related records, or heavily imbalanced classes.

- Stratified Splitting: Stratified splitting preserves the class distribution across both sets, so the training and testing sets each contain the classes in roughly the same proportions as the original data.

- Time-Based Splitting: For temporal data, time-based splitting preserves the chronology: the model trains on earlier observations and is tested on later ones. This approach is essential when predicting future events from historical data, because a random split would let the model learn from the very "future" it is later asked to predict.
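
Stratified and time-based splitting can both be sketched in a few lines of plain Python. The helpers below (`stratified_split` and `time_based_split`) are hypothetical names for illustration. They assume class labels and timestamps are given as Python lists, with timestamps in a sortable form such as ISO 8601 date strings. In scikit-learn, `train_test_split(..., stratify=y)` and `TimeSeriesSplit` cover the same ground.

```python
import random
from collections import defaultdict

def stratified_split(labels, test_fraction=0.2, seed=42):
    """Split row indices so each class keeps (roughly) its original proportion
    in both the training and testing sets."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)                      # shuffle within each class only
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)

def time_based_split(timestamps, test_fraction=0.2):
    """Order rows chronologically. The earliest rows train and the latest rows test."""
    order = sorted(range(len(timestamps)), key=lambda i: timestamps[i])
    n_test = round(len(order) * test_fraction)
    cut = len(order) - n_test
    return order[:cut], order[cut:]

# Usage: 90 negatives and 10 positives, so the test set gets 18 negatives and 2 positives
labels = [0] * 90 + [1] * 10
train_idx, test_idx = stratified_split(labels, test_fraction=0.2, seed=0)
print(sum(labels[i] for i in test_idx), len(test_idx))   # 2 20

# Usage: daily observations, where the final 20% of days form the test set
days = [f"2024-01-{d:02d}" for d in range(1, 11)]
train_days, test_days = time_based_split(days, test_fraction=0.2)
print([days[i] for i in test_days])   # ['2024-01-09', '2024-01-10']
```

The time-based version never shuffles. Shuffling temporal data before splitting would mix later observations into the training set.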

The Role of Data Size and Cross-Validation

The size of your dataset plays a significant role in how you split it. With limited data, a common strategy is to allocate a larger portion to the training set (for example, 80% or more) so the model is exposed to enough diverse examples. With ample data, the exact ratio matters less. Even a modest fraction held out for testing then contains enough examples for a stable estimate, and the training set remains large either way.

A single data split yields one performance estimate, and that estimate can shift noticeably depending on which examples happen to land in the testing set. This is where cross-validation comes into play. In k-fold cross-validation, the dataset is divided into k roughly equal subsets (folds). The model is trained k times, each time holding out a different fold for testing and training on the remaining k - 1 folds. The k scores are then averaged, giving a more robust estimate of performance than any single split.
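
The k-fold procedure can be sketched in plain Python as a generator of index splits. This is a minimal illustration, not a library API: `k_fold_indices` and `cross_validate` are hypothetical helpers. `score_fn` stands in for whatever code trains a model on the training indices and returns a metric on the test indices. scikit-learn’s `KFold` and `cross_val_score` provide production versions.

```python
import random

def k_fold_indices(n_samples, k=5, seed=42):
    """Yield (train, test) index lists. Each of the k folds is the test set once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread any remainder so fold sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if f < n_samples % k else 0) for f in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size

def cross_validate(score_fn, n_samples, k=5, seed=42):
    """Average a user-supplied score_fn(train, test) over all k folds."""
    scores = [score_fn(train, test) for train, test in k_fold_indices(n_samples, k, seed)]
    return sum(scores) / len(scores), scores

# Usage: 10 rows and 3 folds give test folds of sizes 4, 3, and 3
for train, test in k_fold_indices(10, k=3, seed=0):
    print(len(train), len(test))
# 6 4
# 7 3
# 7 3
```

Each row appears in exactly one test fold, so every example is used for evaluation once and for training k - 1 times.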

Data Imbalance and Validation Sets

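Data imbalance, where one class is significantly more prevalent than others, can skew the model’s performance evaluation. A purely random split may leave very few minority-class examples in the testing set, or none at all. Stratified sampling during data splitting keeps the class distribution the same in both sets, so the evaluation reflects every class. Stratification alone does not stop the model from favoring the majority class. That calls for separate measures such as class weighting or resampling, along with metrics like precision and recall rather than plain accuracy.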
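
Stratification combines naturally with cross-validation. The sketch below is a simplified stratified k-fold in plain Python. It shuffles each class separately and deals that class’s rows across the folds like cards, so every fold receives a proportional share of the minority class. The function name `stratified_k_fold` is hypothetical. scikit-learn’s `StratifiedKFold` is the standard implementation.

```python
import random
from collections import defaultdict

def stratified_k_fold(labels, k=5, seed=42):
    """Return k test folds (lists of row indices) whose class mix mirrors the full data."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    folds = [[] for _ in range(k)]
    offset = 0
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal this class's rows round-robin, continuing where the previous class stopped
        for j, idx in enumerate(indices):
            folds[(offset + j) % k].append(idx)
        offset += len(indices)
    return folds

# Usage: 95 majority rows and 5 minority rows, so each of 5 folds gets one minority row
labels = [0] * 95 + [1] * 5
for fold in stratified_k_fold(labels, k=5, seed=0):
    print(len(fold), sum(labels[i] for i in fold))   # prints "20 1" for each fold
```

For each fold, the remaining rows form the training set. A plain random k-fold on the same data could easily produce folds with no minority rows at all.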

Beyond the basic two-way split, many workflows add a validation set. This is a portion carved out of the training data that the model does not train on. You use it to monitor the model’s progress during training and to compare hyperparameter settings. The validation set serves as an intermediary between training and testing. It helps detect overfitting early and allows timely adjustments, while the testing set stays untouched until the final evaluation.
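
A three-way split can be sketched by shuffling once and carving off the testing and validation portions in turn. The 70/15/15 proportions and the helper name `train_val_test_split` are illustrative assumptions. The right ratios depend on the dataset’s size.

```python
import random

def train_val_test_split(n_samples, val_fraction=0.15, test_fraction=0.15, seed=42):
    """Shuffle once, then carve out test and validation sets. The rest is for training."""
    if val_fraction + test_fraction >= 1.0:
        raise ValueError("validation and test fractions must leave room for training")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    n_val = round(n_samples * val_fraction)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test

# Usage: 1,000 rows give 700 train, 150 validation, and 150 test rows
train_idx, val_idx, test_idx = train_val_test_split(1000)
print(len(train_idx), len(val_idx), len(test_idx))   # 700 150 150
```

In a typical workflow, you fit candidate models on the training rows and compare them (or decide when to stop training) on the validation rows. You then evaluate the single chosen model on the test rows once.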

Fine-Tuning the Splits

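Even within data splitting, there’s room for care. Settings such as the test-set fraction and the random seed determine which examples land in each set. Fixing the seed and recording it makes the split reproducible, so experiments can be repeated and compared fairly. The seed is not a hyperparameter to optimize, however. Trying seeds until the test score looks best simply tailors the evaluation to a lucky split. If results vary noticeably from seed to seed, report that variation or use cross-validation instead.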
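
The sketch below shows seeded reproducibility in plain Python. It assumes the split is driven by a dedicated `random.Random(seed)` instance rather than the module-level generator. With that setup, unrelated code that draws random numbers cannot change which rows end up in the test set. `shuffled_split` is a hypothetical helper.

```python
import random

def shuffled_split(n_samples, test_fraction, seed):
    """Seeded shuffle-and-cut split. A dedicated Random instance ignores global RNG state."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]

# The same seed reproduces the identical split, even if other code
# consumes numbers from the global random module in between.
first = shuffled_split(100, 0.2, seed=7)
random.random()                       # unrelated use of the global generator
second = shuffled_split(100, 0.2, seed=7)
print(first == second)                # True

# A different seed generally produces a different, equally valid split.
other = shuffled_split(100, 0.2, seed=8)
print(first[1] == other[1])
```

Storing the seed alongside each experiment’s results is enough to recreate its exact split later.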

Building a Solid Foundation for Model Development

In the evolving landscape of machine learning, data splitting remains a critical juncture that significantly affects both model performance and how reliably that performance is measured. By thoughtfully allocating data to training and testing sets, you let your model learn from one portion and get an honest measure of its predictions on the other. Choosing the right splitting strategy and applying cross-validation make those estimates more robust and reliable. With a sound data splitting strategy in place, you lay a solid foundation for a model that is accurate and ready for real-world use.